AI Governance and the EU AI Act: What Organisations Need to Know

by Sypher | Published in Resources

Did you know that 42% of employees now use generative AI, up from 26% in 2024, and that a third admit to using it in secret? This surge in adoption is one of many reasons why organisations cannot afford to ignore the EU AI Act.

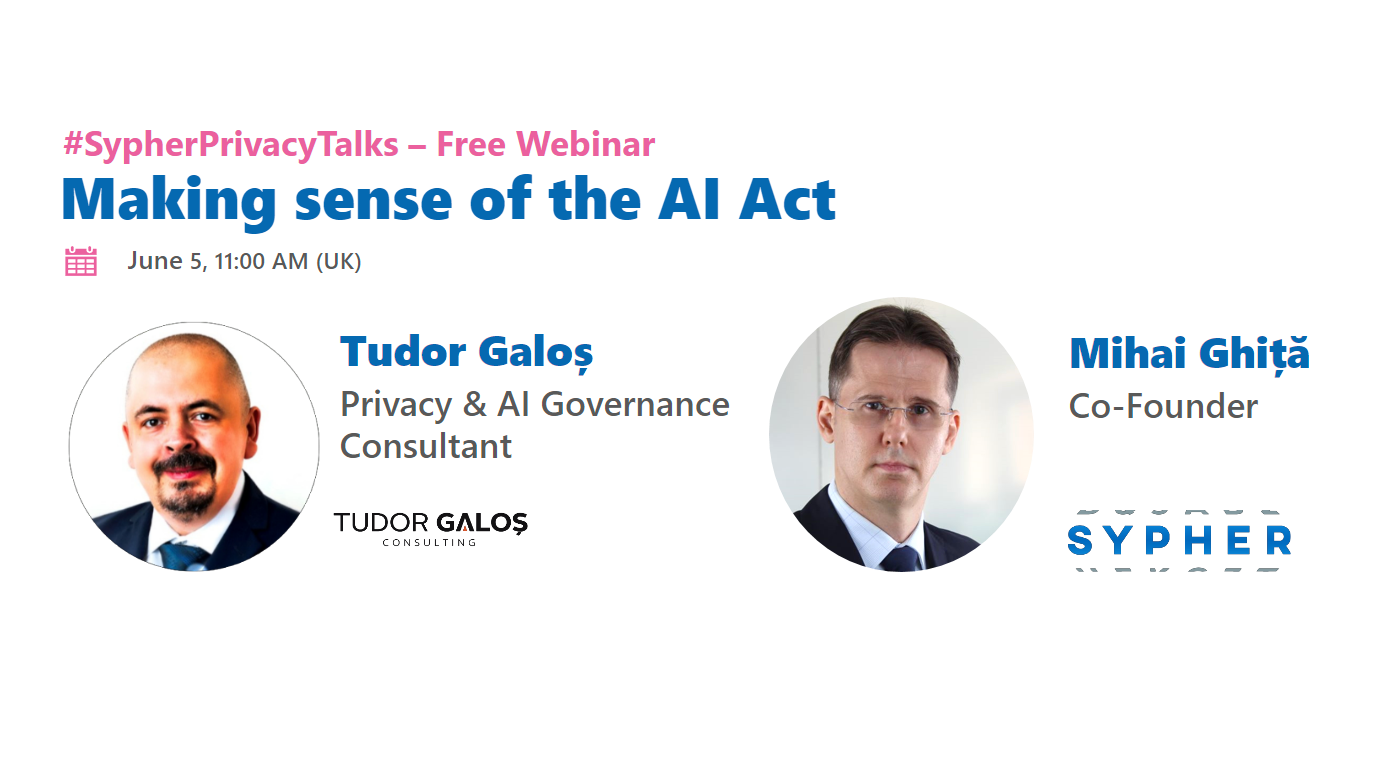

“Most people don’t understand how serious this is,” warned Tudor Galos, AI Governance and Data Protection Consultant, during a Sypher webinar on AI governance. “While AI usage is omnipresent, a big problem is data privacy and sharing confidential information – it’s very easy to cause a data breach without knowing.”

The EU AI Act has wide-reaching implications

The EU AI Act applies to a wide range of roles, including:

- Providers (creators of AI systems)

- Deployers (companies using AI)

- Importers, distributors and authorised representatives within the EU

- Product manufacturers embedding AI

- Affected persons located in the EU (broadly what GDPR calls “data subjects”)

Biggest challenges in implementing the EU AI Act

- Chaotic AI usage: With employees experimenting with AI tools in secret, organisations risk exposure without realising it.

- Fast time to impact: AI can cause harm in minutes, not days, requiring rapid oversight and escalation procedures.

- Complex obligations: Roles and responsibilities differ depending on whether you’re a provider, deployer, or manufacturer, and roles can shift with the use case and with how a system is modified (for example, substantially modifying a high-risk system can turn a deployer into a provider).

- Transparency requirements: For generative AI, the obligation to disclose deepfakes will be difficult to enforce consistently.

- Open-source models: Many organisations run open-source large language models locally, often without clarity on whether that makes them providers or deployers.

- Regulatory uncertainty: While industries such as pharma, banking, and insurance are leading the way in AI governance, most others are lagging. Guidance on documentation and enforcement is still evolving.

Key compliance steps for companies in 2025

The first two chapters of the EU AI Act (general provisions, including AI literacy, and prohibited AI practices) have applied since 2 February 2025. To prepare, organisations need to:

- Ensure AI literacy among employees (Article 4 of the Act)

Organisations must take measures to ensure their staff have a sufficient level of AI literacy. In practice, every employee should receive training and understand how to use AI responsibly, what risks they introduce, and the consequences of AI use, especially for confidential and personal data.

“There are many papers around the requirements of AI literacy, but common sense is to have at least a training for everybody, all employees, including blue collar and white collar, on the use of AI. Because many of them are using AI and they are bringing the responsibility of the company in their use of it without even knowing,” said Tudor Galos.

- Conduct risk assessments

Obligations depend on the risk level of the AI system:

- Providers of high-risk AI systems must carry out conformity assessments, document how the system can be used safely, and, once available, obtain the CE marking for their AI products.

- Deployers of high-risk AI systems must conduct data protection impact assessments (DPIAs) where personal data is processed, and many must also conduct fundamental rights impact assessments covering rights such as the right to work, to marry, or to a name (notably public bodies, private providers of public services, and deployers using AI for credit scoring or for life and health insurance pricing). They must also implement hard (technical) and soft (organisational) controls, enable human oversight (which applies in certain conditions), and maintain risk management documentation.


Lower-risk use cases, such as open-source LLMs deployed on local servers, may fall outside the Act, but a risk assessment is still essential to confirm their compliance status.

“On the deployer side, if you have a high-risk AI system, you need to perform a fundamental rights impact assessment and a data protection impact assessment (DPIA), because not only EU AI Act applies, but also GDPR,” said Tudor Galos.

Tip: Factor in AI’s “fast time to impact”. Set up monitoring and SLAs that allow human intervention within minutes or hours, not days, so that someone can step in immediately if an AI system causes harm.


- Maintain proper documentation to demonstrate compliance

Like GDPR’s Record of Processing Activities (ROPA), the AI Act requires organisations to document how AI is used, so maintaining an AI system inventory is critical.

What to include in your AI inventory (very similar to GDPR’s ROPA):

- Capture AI use across departments

- Document where data is stored (very important!)

- Record the categories of data processed and the purpose of processing

- Include embedded AI components, not just standalone applications

“You should have the AI inventory immediately available, just like in GDPR. Do the risk assessments today, proactively, at least the inventory. Know exactly what applications you have in the organization. You might be surprised to find how many AIs you already have in your organization, especially if you use, for example, cloud services,” said Tudor Galos.

Expanding your existing ROPA to cover AI use is a practical way to meet these obligations without starting from scratch.

It is recommended to retain AI inventory records for at least three years after decommissioning a system, to demonstrate compliance and due diligence.
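If you keep your inventory in code or want a template to seed a spreadsheet, the fields listed above can be sketched as a simple record. This is a hypothetical schema in Python, not a format the AI Act prescribes: the class name `AIInventoryEntry`, its field names, the helper `missing_storage_location`, and the choice to compute a "retain until" date from the three-year recommendation are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical constant based on the three-year retention recommendation above.
RETENTION_YEARS = 3


@dataclass
class AIInventoryEntry:
    """One row of an AI system inventory (hypothetical schema, modelled on a ROPA)."""
    system_name: str
    department: str              # where in the organisation the AI is used
    data_location: str           # where the data is stored
    data_categories: list[str]   # categories of data processed
    purpose: str                 # purpose of processing
    embedded: bool = False       # True if the AI is a component inside another product
    decommissioned_on: Optional[date] = None

    def retain_until(self) -> Optional[date]:
        """Earliest date the record could be deleted; None while the system is live."""
        if self.decommissioned_on is None:
            return None
        d = self.decommissioned_on
        try:
            return d.replace(year=d.year + RETENTION_YEARS)
        except ValueError:
            # 29 February with a non-leap target year: fall back to 28 February.
            return d.replace(year=d.year + RETENTION_YEARS, day=28)


def missing_storage_location(entries: list[AIInventoryEntry]) -> list[str]:
    """Names of systems whose data storage location has not been documented."""
    return [e.system_name for e in entries if not e.data_location.strip()]


# Usage example with made-up systems.
inventory = [
    AIInventoryEntry("Support chatbot", "Customer service", "EU cloud region",
                     ["contact details", "chat transcripts"], "Answer customer queries"),
    AIInventoryEntry("CRM lead scoring", "Sales", "", ["customer records"],
                     "Prioritise leads", embedded=True,
                     decommissioned_on=date(2025, 3, 31)),
]
print(missing_storage_location(inventory))  # ['CRM lead scoring']
print(inventory[1].retain_until())          # 2028-03-31
```

Flagging entries with no documented storage location reflects the emphasis above on knowing where data is stored; the `embedded` flag keeps AI components inside larger products visible alongside standalone tools.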

“Something like Sypher is great. Because Sypher is doing a great job in documenting the ROPA and the AI inventory is very similar to ROPA. You only need to change a few items and there you have it,” said Tudor Galos.

The EU AI Act and GDPR

The EU AI Act complements GDPR by focusing on AI system quality and fundamental rights, while GDPR centres on personal data protection. Both require risk assessments and ongoing compliance. GDPR is built as rights-based legislation, while the AI Act functions more like product-safety legislation.

“If you’re genuinely looking after the rights of your employees, customers, partners, you’re already 80-90% compliant to both GDPR and EU AI Act,” said Tudor Galos.

AI Act compliance is expected to evolve, with AI governance roles increasingly merging with those of data protection officers. What’s clear is that the EU is taking a protective stance on citizens’ rights in AI deployment, and organisations must be proactive in managing AI risks and compliance.
